Earlier this week Health Affairs published an entire issue dedicated to the topic of racism and health. As a peer-reviewed health policy journal, Health Affairs aims to serve as a high-level, nonpartisan forum for analysis and discussion on improving health and health care, including issues of cost, quality, and access. This issue fits squarely within that mission.

Years of planning and thousands of hours of effort went into producing the issue. We went beyond the boundaries of the journal with a video interview, virtual events, interactive elements, and more, all of which can be found on our dedicated landing page.

Health Affairs has more than 160,000 Twitter followers and a weekly newsletter with over 100,000 subscribers, but we wanted to be sure to reach new readers with this important content. How might a journal do that? With paid placement of our content on Twitter and Google (YouTube), targeted to individual users based on interests, profession, age, and location. This standard outreach method is called paid media.

Not so fast…

The Bots Are Blocking Us

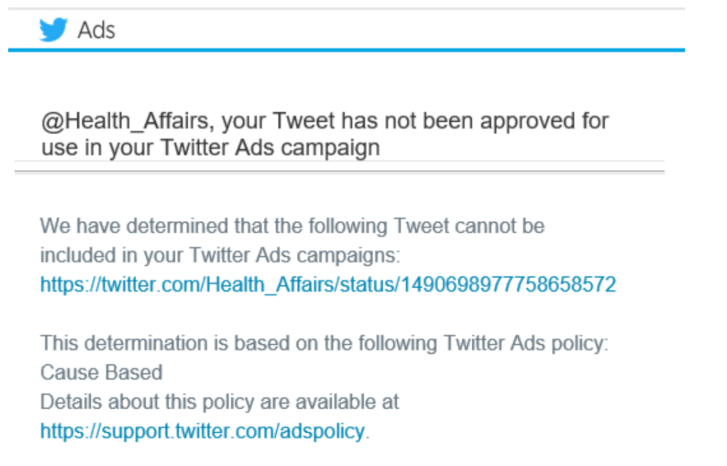

As I write this article, all our paid media ads on Google and Twitter that promote our racism and health content have been placed on hold. The images below are the responses we received from those two companies:

What is going on here?

The automated systems these large technology firms use to ensure organizations don't promote falsehoods have found the word "racism" in our materials and flagged it as sensitive content. And we're hardly the only publication to run into this sort of problem.

We know that search engine algorithms reinforce racism. But what happens when those algorithms mean we can’t even talk about racism?

At first, we were frustrated with ourselves. Maybe we should have anticipated this and filed a special request in advance. But should such a step really be necessary?

Then we went into problem-solving mode. Do we know someone who knows someone who works at Google or Twitter who can fix this? How quickly can we cut through the red tape and submit the required documents to get approval to promote our content?

But now we're just angry. The bots or the algorithms, whatever you want to call them, are blocking our content. Our ads have been scanned by a robot; the robot sees the word "racism" and disapproves the material.

This is a perfect example of why it can be difficult to talk about racism. Sure, racism is "sensitive content," which is what each platform told us. But the research we published is exactly the sort of sensitive content our country needs right now: peer-reviewed research, rooted in sound methods, that demonstrates and explores the relationship between racism and health.

We understand that much of the content posted to platforms like Google and Twitter that uses terms such as "racism" and "discrimination" can fuel unrest, violence, hate, trolling, and misuse of the tech giants' platforms. But there is no way to change this if those same platforms silence content that is designed to foster productive discourse.

Help Us Beat The Bots

We have begun the process of appealing the decision and, hopefully, we will succeed in getting our ads to appear. But we are already three days past the issue's launch. Because promotion for an issue of this caliber should begin well before launch day, we are at least two weeks late to the promotion game.

We can't change the algorithm, so let's play the game. The more shares, clicks, views, comments, and so on that this post and all our content on racism and health receive, the more signals we send. These signals tell the platforms that our content is important, that we have the authority to publish on this topic, and that users like you want to read it.

Join us in our small act of civil disobedience. When it comes to our issue of Racism & Health, read it. Listen to it. Watch it. Search for it. Share it. Tweet about it. Email it.

We hope our ads will show up soon. Until then, let’s beat the algorithm.

Article From: Health Affairs

Author: Patti Sweet